We had a bit of a “domestic energy” focus over the weekend, whilst the mercury climbed.

Shopping around

I lost a few hours “shopping around” again to see if there were cheaper options for gas at home.

That’s time I’ll never get back: wading through the detail of daily charges and (with some retailers) declining block tariffs for what, in the end, seemed to offer a saving of about $40 on a $1000 annual spend. That’s a pretty poor return per hour (not even counting the time to go through the transfer process) – but that’s food for another post.
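As a back-of-the-envelope check on that "hourly rate", here is a minimal sketch. The number of hours is an assumption ("a few hours" is taken as 4). The sketch also counts only the first year's saving.

```python
def effective_hourly_rate(annual_saving: float, hours_spent: float) -> float:
    """Dollars saved per hour of research effort (first year only)."""
    if hours_spent <= 0:
        raise ValueError("hours_spent must be positive")
    return annual_saving / hours_spent


annual_spend = 1000.0   # approximate annual gas bill from the post
annual_saving = 40.0    # approximate saving found by shopping around
hours_spent = 4.0       # assumption: "a few hours"

print(f"Saving: {annual_saving / annual_spend:.0%} of the annual bill")
print(f"Effective rate: ${effective_hourly_rate(annual_saving, hours_spent):.2f} per hour")
```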

Also on the weekend, we replaced another LED downlight in our house as it had decided it did not want to work any more.

This is becoming quite an annoying practice, because:

1) we have something like 63 of these in ceilings through the house – some in quite hard-to-access locations, and spring-loaded in ceilings that are not going to take kindly to being repeatedly prised out for replacement (i.e. we need to replace the whole light fitting, rather than just a bulb);

2) part of the justification for installing them was the promise that they would “last for 10 years”, and yet I have already replaced 10-20 of them over the 5-year period from 2013 to 2018 (hence I feel like we’ve been sold a dud on the longevity front);

3) I do miss the simplicity of the bayonet/screw-style replacement of the old light bulbs, and really don’t fancy having to do this kind of thing at the current frequency (or higher) into the future…
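This rough sketch puts point 2 in numbers. It uses the post's approximate figures: about 63 fittings and 10 to 20 replacements over 5 years. It assumes every replacement was a separate fitting failure. It also says nothing about the failure mechanism.

```python
def failure_summary(fleet_size: int, failures: int, years: float) -> tuple[float, float]:
    """Return (fraction of the fleet replaced so far, average replacements per year)."""
    if fleet_size <= 0 or years <= 0:
        raise ValueError("fleet_size and years must be positive")
    return failures / fleet_size, failures / years


FLEET_SIZE = 63   # approximate number of downlights in the house
YEARS = 5         # 2013 to 2018

for failures in (10, 20):  # low and high ends of the quoted range
    fraction, per_year = failure_summary(FLEET_SIZE, failures, YEARS)
    print(f"{failures} failures: {fraction:.0%} of fittings, about {per_year:.0f} per year")
```

On these figures, somewhere between a sixth and a third of the fittings have gone within half of the promised 10-year life.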

This irritation (and curiosity sparked by Tristan’s article last week here and elsewhere – and some reaction to it) prompted me to dive into my Solar Analytics account for our residential rooftop PV power station (see earlier thoughts posted here a year ago) in order to access measured voltage levels at home.

Knowing that we were experiencing problems with blown LEDs, I had opted for the higher subscription level from Solar Analytics because:

1) I had been told by a number of electrically-minded people that they suspected high voltage as the reason our lights are blowing;

2) our solar, and that from neighbouring houses, might be a driver for higher voltages;

3) it’s been stated elsewhere that local DNSPs (Energex, in our case) don’t have much visibility of what happens in their network below the zone substation; hence

4) I wanted to access a trend of voltage over time so that I could see what it had actually been; and then

5) from this basis, understand whether there was something we could do to significantly reduce the expiration rate of LEDs.

In this brief post, I take the analysis as far as I am able (technically, I’m out of my depth here) in the hope that more knowledgeable readers can help me understand what’s going on, with an explanation that’s low on the lingo and minimally influenced by either extreme of the Emotion-o-meter.

My Solar Analytics account allows me to download data by month, so I grabbed what was there for October 2018 – being the most recent one, and with the latest LED having gone kaput during the month (I think it was 20th or 21st October – it was certainly at night).

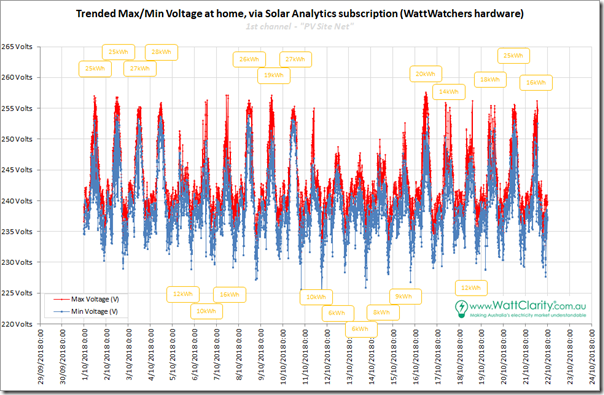

The file I downloaded had data for 6 different “channels” (not sure if that is the correct term), with MIN and MAX voltage supplied for each of them. Not knowing any better, I chose the 1st channel (which our helpful installer at Positronic had labelled “PV_Site_Net”), and trended both MIN and MAX voltages over the period up until the end of Sunday 21st October:
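For anyone wanting to reproduce the chart, here is a minimal sketch that reduces a month of MIN/MAX voltage readings for one channel to a daily low and high. It uses only the Python standard library. The column names (`timestamp`, `v_min`, `v_max`) and the timestamp format are hypothetical placeholders, because the exact layout of the Solar Analytics export isn't shown here. Check the header row of your own file and adjust them.

```python
import csv
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, Iterable, Tuple


def daily_voltage_range(
    lines: Iterable[str],
    min_col: str = "v_min",               # hypothetical column name
    max_col: str = "v_max",               # hypothetical column name
    time_col: str = "timestamp",          # hypothetical column name
    time_fmt: str = "%Y-%m-%d %H:%M:%S",  # assumed timestamp format
) -> Dict[date, Tuple[float, float]]:
    """Return {day: (lowest MIN voltage, highest MAX voltage)} in date order."""
    lows: Dict[date, float] = defaultdict(lambda: float("inf"))
    highs: Dict[date, float] = defaultdict(lambda: float("-inf"))
    for row in csv.DictReader(lines):
        day = datetime.strptime(row[time_col], time_fmt).date()
        lows[day] = min(lows[day], float(row[min_col]))
        highs[day] = max(highs[day], float(row[max_col]))
    return {day: (lows[day], highs[day]) for day in sorted(lows)}
```

The `lines` argument can be an open file. For example, `with open("october-2018.csv", newline="") as f: daily = daily_voltage_range(f)` (the filename is hypothetical). To trend a particular channel such as PV_Site_Net, point `min_col` and `max_col` at that channel's columns.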

Because I was interested in the correlation between local voltage and solar output, I have also annotated the chart with the daily production from our PV array.

With a mental note to self that “correlation does not necessarily mean causation”, there does seem to be a fair degree of correlation between solar output and local voltage – as in:

1) Voltage consistently seems to dip overnight and rise through the day; and

2) Days where solar output was highest seem to be days where (both MAX and MIN) voltage trended highest during daylight hours.
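One way to put a number on "a fair degree of correlation" is the Pearson correlation coefficient. It can be computed between daily PV production and, say, the daily maximum voltage; that second series is an assumption. A coefficient near +1 still only shows that the two series move together, not that one causes the other.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length (at least 2 points)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)
```

Feed it one value per day, for example `pearson(daily_kwh, daily_max_volts)` (the names are hypothetical). Both lists must cover the same days in the same order. On Python 3.10 or later, `statistics.correlation` computes the same coefficient.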

What I don’t know are the following:

Question 1 = does higher solar output drive voltage higher?

Question 2 = what’s the voltage supposed to be?

i.e. I have had 240V stuck in my head from decades ago, but Tristan’s article (which references this University of NSW conference paper) suggests that voltages in Queensland were supposed to be lowered to 230V.

Question 3 = does higher voltage have implications for appliances at home (and, in particular, with my failing LEDs)?

Question 4 = if so, what can I do about this?

I’ll look forward to hearing back from those who can help me with this.

3. 230/460 volts (single phase) with an allowable margin of +10/-6 per cent (between 216 and 253).

http://www.parliament.qld.gov.au/Documents/TableOffice/TabledPapers/2018/5618T56.pdf
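The band quoted above is easy to check. This short sketch computes the limits from a nominal voltage and a percentage tolerance. It applies them to the 230 V +10%/-6% limits and, for comparison, to the older 240 V ±6% figure that commenters mention.

```python
def voltage_band(nominal: float, plus_pct: float, minus_pct: float) -> tuple[float, float]:
    """Return (lower, upper) voltage limits for a nominal voltage and tolerance."""
    return nominal * (1 - minus_pct / 100), nominal * (1 + plus_pct / 100)


new_lo, new_hi = voltage_band(230, plus_pct=10, minus_pct=6)
old_lo, old_hi = voltage_band(240, plus_pct=6, minus_pct=6)

print(f"230 V +10%/-6%: {new_lo:.1f} V to {new_hi:.1f} V")
print(f"240 V +/-6%:    {old_lo:.1f} V to {old_hi:.1f} V")
print(f"Upper limit falls by {old_hi - new_hi:.1f} V; lower limit falls by {old_lo - new_lo:.1f} V")
```

This gives 216.2 V to 253.0 V for the new band and 225.6 V to 254.4 V for the old one. The upper limit barely moves (1.4 V), while the lower limit drops by 9.4 V.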

Paul, can I suggest that, in the case of blown LEDs, you try to measure the temperature inside the suspect downlight fittings and compare it with lights that work fine? Voltage will affect all lights equally (and any other electrical appliances), while temperature and moisture can affect individual fittings separately.

I have had combinations of fluoros and LEDs for years with no issues, and my power sockets read 245V pretty regularly (I have 3kW of solar panels and am about 50m from a pad-mount substation).

I recently changed 8 fluoro downlights to LED (Edison thread) and they are all fine, except for one that lasted 1 hour and went kaput. I put this down to a manufacturing fault, since any other bulb works fine in that socket.

I have found that temperature, moisture, vermin, dirt and build quality have played a greater role in bulb life at my place than voltage.

Your quest seems to be to understand more about power quality in general, in which case there are some terms that you might need to understand in more detail:

– flicker, unbalance, harmonics, overvoltage and undervoltage. There is more to it than just voltage, and there are any number of IEEE articles discussing the power quality effects of solar inverters and similar non-linear power electronic devices.

Q1. Does solar output drive voltage higher – yes! I visualise this by thinking about the situation with no solar – all the power is coming from a fleet of generators far away, with associated losses and voltage drop. With solar being exported locally you are effectively reducing the load and the losses, so the voltage increases.

Q2. Voltage is supposed to be 230V +10% -6%, but it’s a big deal to change all the off-load tap changers in distribution transformers in all the suburbs… it takes time, coordination and prioritisation.

Q3. Implications for equipment – see above discussion on power quality and local conditions separate to voltage

Q4. You can buy separate power metering devices that plug into a power socket; gathering information might be the first step.

There is a lot to unpack in these questions.

A1) Voltage rise during load is a characteristic of Line Drop Compensation (LDC) for customers at the front end of the 11kV feeder. Wikipedia has a good article on LDC and the associated problems caused by distributed generation: https://en.wikipedia.org/wiki/Voltage_regulation I strongly suggest reading this.

A2) While some claim that the 230V standard was introduced to “allow more solar”, this is a furphy. As a matter of fact, the 230V standard was introduced back in the year 2000 (well before widespread adoption of PV). It was actually introduced to allow more voltage drop along the feeder, to squeeze more out of existing electrical assets. The old 240V standard (only recently superseded) was 240V +/-6%, which makes the voltage range 254.4V down to 225.6V. The 230V standard has a range of +10% to -6%, which makes the voltage range 253V down to 216.2V. As you can see, the upper limit hardly changes (1.4V), yet the lower limit drops considerably (9.4V). To be more specific, the system voltage has to be below the upper limit 99% of the time, and above the lower limit 99% of the time. If it is outside these limits, then complain to Energex. They are generally very responsive.

A3) Maybe. But there are a multitude of factors. As Ben has pointed out, temperature, moisture, vermin, dirt, and build quality all play a part.

A4) I would start by ensuring adequate ventilation around the LED bulb and buying good-quality components.
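This deliberately simplified sketch of Line Drop Compensation makes A1 a little more concrete. It assumes:

- a purely resistive feeder;
- scalar (not phasor) quantities;
- per-unit values;
- all the load lumped at the far end.

The regulator raises its output in proportion to the measured current so that the far end stays near the target. Customers near the head of the feeder therefore see their voltage rise as load rises. All parameter values are made up for illustration.

```python
def ldc_sending_voltage(v_target: float, current: float, r_comp: float) -> float:
    """Regulator output: target voltage plus the compensated drop I * R_comp."""
    return v_target + current * r_comp


def voltage_along_feeder(v_send: float, current: float, r_feeder: float, position: float) -> float:
    """Voltage at a fraction `position` along the feeder (0 = head, 1 = far end).

    Assumes all load is lumped at the far end, so the full current flows along
    the whole feeder and the drop grows linearly with distance.
    """
    return v_send - current * r_feeder * position


R = 0.05  # hypothetical feeder resistance (per unit), with R_comp set equal to it
for load in (0.2, 1.0):  # light and heavy load, per-unit current
    v_send = ldc_sending_voltage(1.0, load, r_comp=R)
    head = voltage_along_feeder(v_send, load, R, position=0.0)
    tail = voltage_along_feeder(v_send, load, R, position=1.0)
    print(f"load {load:.1f} pu: head {head:.3f} pu, far end {tail:.3f} pu")
```

In this toy model, a net export (a negative current) would pull the regulator output below its target. That hints at why distributed generation complicates LDC. Real schemes are more involved.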

Just some other points:

Energex has been installing power quality metering equipment on the 11kV/415V transformers for quite a while now. I would suggest reading their public domain document titled, “Power Quality Augex Forecast 2015/20”. It is very detailed and also addresses some of your specific concerns in the article.

The old BC/ES fittings have significant safety issues. A child or adult can readily put their finger onto energised terminals. The newer GU10 and/or 12V screw fittings are much safer.

Hi Paul, I suspect that your downlights aren’t on when the sun is out, so a higher voltage due to solar output is unlikely to be the issue. Heat build-up during operation, on the other hand, could be, as Ben suggests. I’ve had some flush-fitting downlights for a few years now and not a single one has failed to date. I suspect it’s because the switch-mode power supplies are separate from the lights themselves and sit on top of the ceiling insulation. They were about $100 for a box of ten from eBay, great value! The previous LEDs they replaced were bulb-type lamps installed in can-type downlights; they got as hot as Hades and failed regularly. LED bulbs fitted to open holders, on the other hand, have been fine.

This document from DNRME explains in detail the voltage settings, their history, the reasons behind them and the future direction.

https://www.dnrme.qld.gov.au/__data/assets/pdf_file/0005/1279571/decision-ris-qld-statutory-voltage-limits.pdf

References 95-100 in our paper relate to the issue of voltage control at distribution level with rising PV penetration. They are a little dated now; however, it is indeed an issue, and one that has been on some radars for a while. https://www.researchgate.net/publication/315745952_Burden_of_proof_A_comprehensive_review_of_the_feasibility_of_100_renewable-electricity_systems#pfb

Great use of Solar Analytics data, Paul. I couldn’t help but dig a bit further. I’ll send you some more info.

For the moment, on question 1:

Yes, PV generation will correlate with grid voltage to some extent. Your inverter needs to raise the local voltage in order to push current back upstream towards the transformer. How much depends on what else is happening on your local feeder. So if you and your neighbours are all generating lots of energy, voltage will go up but if you are all consuming lots, it will go down.
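A common back-of-the-envelope approximation for the voltage rise across an exporting connection is ΔV ≈ (R·P + X·Q) / V. In it:

- R and X are the resistance and reactance of the path back to the transformer;
- P is the real power exported;
- Q is the reactive power exported (negative when the inverter absorbs reactive power).

The sketch below applies this approximation to a single-phase connection. The impedance values and the 5 kW export are hypothetical. The sketch ignores the neighbours' exports on the same feeder, which add to the rise. It also shows the effect of running at 0.9 power factor while absorbing reactive power, the setting mentioned in the reply further down.

```python
from math import acos, tan


def reactive_power_for_pf(p_w: float, pf: float) -> float:
    """Magnitude of reactive power (var) that accompanies p_w at power factor pf."""
    return p_w * tan(acos(pf))


def voltage_rise(p_w: float, q_var: float, r_ohm: float, x_ohm: float, v_nom: float = 230.0) -> float:
    """Approximate voltage rise dV ~ (R*P + X*Q) / V.

    P and Q are positive when exported; pass a negative Q when the inverter
    absorbs reactive power.
    """
    return (r_ohm * p_w + x_ohm * q_var) / v_nom


P_EXPORT = 5000.0        # hypothetical export, W
R_OHM, X_OHM = 0.3, 0.1  # hypothetical loop resistance and reactance, ohms

unity = voltage_rise(P_EXPORT, 0.0, R_OHM, X_OHM)
q_absorbed = reactive_power_for_pf(P_EXPORT, 0.9)
pf_09 = voltage_rise(P_EXPORT, -q_absorbed, R_OHM, X_OHM)
print(f"unity PF: rise of about {unity:.1f} V")
print(f"0.9 PF, absorbing {q_absorbed:.0f} var: rise of about {pf_09:.1f} V")
```

Producing 5 kW at 0.9 power factor needs about 5.56 kVA of inverter capacity (5000 / 0.9). That is why real output is only affected when the inverter is at its limit. With a resistance-dominated path like the one assumed here, absorbing reactive power trims only part of the rise.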

You’re seeing some pretty massive swings throughout the day, so I’m guessing you are towards the end of a feeder with plenty of solar on it.

Possible solutions:

1. Balance the generation. You’re on three phase, so a three-phase inverter might have helped reduce the voltage rise on any particular phase. I also see that the voltage on one of the other phases is much lower, so putting the inverter on that phase would even things out a bit. In these cases, some installers put Solar Analytics on before they install the system so they can find the phase with the lowest voltage.

2. Reactive Power Control. Qld now requires a power factor of 0.9 lagging to “consume” reactive power, which helps to limit voltage rise. This doesn’t affect your real power generation unless your inverter is maxed out. Perhaps check if your installer can enable a reactive power setting on your inverter.

3. Load shifting. Balancing generation and demand at local level helps to balance voltage and increase the amount of solar that the network can handle. We’re working on a virtual peer-to-peer product to help non-solar customers participate in that load-shifting. Most networks also have demand response trials but they need to get a move on.

4. Tap change. As noted by others, the standard is now 230V +10%/-6%. It looks like they could easily go down 5-10V. It is a tough gig as a DNSP though as they have so little visibility and these changes cost money. We’re trying to help as our database grows with loads of useful data like you’ve demonstrated.
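Point 4 and the "99% of the time" test mentioned earlier in the thread suggest a rough way to judge whether a tap change would help. Shift the measured readings down, then count how many still fall outside 216.2 V to 253 V. This sketch makes three assumptions:

- The shift is uniform. A real tap change scales the voltage by a percentage step, and loads and inverters respond to it.
- Each reading represents one equal time interval.
- The readings in the example are made up.

```python
from typing import Sequence, Tuple

LOWER_V = 216.2   # 230 V - 6%
UPPER_V = 253.0   # 230 V + 10%


def fraction_outside(readings: Sequence[float], shift_v: float = 0.0) -> Tuple[float, float]:
    """Return (fraction below LOWER_V, fraction above UPPER_V) after shifting readings."""
    if not readings:
        raise ValueError("no readings")
    shifted = [v + shift_v for v in readings]
    below = sum(v < LOWER_V for v in shifted) / len(shifted)
    above = sum(v > UPPER_V for v in shifted) / len(shifted)
    return below, above


def complies(readings: Sequence[float], shift_v: float = 0.0, allowance: float = 0.01) -> bool:
    """True if no more than `allowance` of readings fall outside each limit."""
    below, above = fraction_outside(readings, shift_v)
    return below <= allowance and above <= allowance


# Made-up readings: 95 intervals at 250 V and 5 intervals at 256 V.
readings = [250.0] * 95 + [256.0] * 5
for shift in (0.0, -5.0, -10.0):
    print(f"shift {shift:+.0f} V: complies = {complies(readings, shift)}")
```

The same shift also pulls the overnight dips down. On a site with a large daily swing, the lower limit can therefore become the binding constraint.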

I would like to know from someone who writes here: can solar from a house go into the grid and back through a transformer, to, say, the next area and then to another house? Or does it have to stay on the supply side of the transformer?

In short, yes. The transformer will change the voltage (and therefore current) but the power will flow throughout the grid to wherever it’s required. Generation and Consumption need to be balanced across the grid as a whole (e.g. the NEM connecting Qld-NSW-Vic-SA-Tas) otherwise the frequency will rise or fall accordingly.

So solar power from a roof can go all the way back to, say, a main generation point, being stepped up along the way? Let’s say the local wind farm, which for this example is 100 km away?

Yes. Electricity will always flow from a point of higher voltage to lower voltage. Solar inverters push power into the network by injecting it at a voltage slightly higher than what it’s connected to. Hence the topic of the article.

Transformers don’t care which way power flows. Either direction is fine, although because they were designed for one direction, they may be a little more efficient in that direction.

There are a number of feeders (11kV lines from sub-stations) that already supply net-positive areas. Currimundi, for example, is a suburb with periods of good solar when it generates more power than it consumes, and that power goes back to the sub-station and out to another feeder.

Technically, there is nothing wrong with all of a sub-station’s feeders being net positive; the whole sub-station would then push power further up the transmission network. So long as voltage levels throughout are within the acceptable range, all is fine.

Thanks for sharing, Paul.

Your plot shows a very large difference between day-time voltages and night-time voltages. It made me wonder how many sites have a similar range so I ran some numbers and found that you are indeed at the upper end. I’ll send you some more data.

As far as your Emotion-o-meter goes, I’m aware of at least 5 organisations that have made some kind of detailed study of 100% RE, and one of these organisations, AEMO, keeps the lights on 99.998% of the time for the NEM. Are you suggesting BZE, UNSW academics, AEMO, SEN, UTS for GetUp! are all “loopy left” organisations and not competent at engineering analysis and modelling?

Trying to claim credibility by asserting one is part of a “sensible centre” is nothing more than rhetorical spin. I could deconstruct the “sensible centre” concept if you wish but my point is more about you positioning 100% RE (or say 95% RE for that matter) as some kind of unrealisable ideal only subscribed to by hippies who don’t know what they are talking about.

Alastair Leith

Outreach Coordinator

Sustainable Energy Now

As an electrical engineer who repairs electronics for a living, I can tell you that it is mainly the temperature of the device (or rather, inside it) and hence the temperature of the components that negatively affects its life.

Specifically, in Switched-Mode Power Supplies, the Capacitors are rated in hours at a particular temperature, for example, 2000 HRS @85 deg C. If the temperature in the device is 85 degrees, then it can be expected that the capacitor will be past its useful life in 2000 hours. This is under a year if the light is on for 8 hours a day.

However, if the temperature is reduced significantly, then the capacitor will last much longer.

The number of Switched Mode Power Supplies with failed capacitors I have repaired is countless, and in larger devices it is usually a small 10uF 50V cap that goes; this is used to filter the power to the SMPS control chip.

Whenever I replace them I try to install 10000 HR @ 105 deg C rated capacitors for longer life, if available in the size and voltage required.
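The temperature dependence described above is often approximated with a rule of thumb for aluminium electrolytic capacitors. Expected life roughly doubles for every 10 °C that the operating temperature sits below the rated temperature. The sketch below applies that rule to the two ratings mentioned: 2000 h at 85 °C and 10000 h at 105 °C. The 70 °C operating temperature is an assumption. The rule ignores ripple current and voltage stress, and manufacturers' datasheets should take precedence.

```python
def capacitor_life_hours(rated_hours: float, rated_temp_c: float, actual_temp_c: float) -> float:
    """Estimated life using the 'doubles every 10 degC cooler' rule of thumb."""
    return rated_hours * 2 ** ((rated_temp_c - actual_temp_c) / 10)


HOURS_PER_DAY = 8      # usage figure from the comment above
OPERATING_TEMP = 70.0  # assumption: temperature inside the fitting

for rated_hours, rated_temp in ((2000, 85), (10000, 105)):
    for temp in (rated_temp, OPERATING_TEMP):
        hours = capacitor_life_hours(rated_hours, rated_temp, temp)
        years = hours / HOURS_PER_DAY / 365
        print(f"{rated_hours} h @ {rated_temp} C part at {temp:.0f} C: "
              f"~{hours:,.0f} h (~{years:.1f} years at {HOURS_PER_DAY} h/day)")
```

At its rated 85 °C, the 2000 h part lasts about 250 days at 8 hours a day, consistent with "under a year". Extrapolating several doublings below rating, as the 105 °C case does here, should be treated as optimistic.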

In LED downlights, some are direct plug-in 12V versions, while others have their own 240V SMPS to enable dimming, replacing the old transformer-style power supply.

However, it’s usually the direct plug-in 240V ones (i.e. bayonet cap / Edison screw) that fail, because they have to pack the power supply and the LED with its heatsink on top into a small area with barely any ventilation, if any!

At home, we have had a few separate power supply type downlights fail; however, they were just pushed into the roof space, and both failed ones happened to be covered in insulation – further evidence that keeping the temperature down is key to long life of any device that relies on electrolytic capacitors.

Most mains rated equipment generally has a safety margin for incoming voltage, and although a higher voltage will also increase aging in the capacitors, the heat is the main cause of rapid aging.